By: Dawn Zoldi

At GBEF EDGE 2026 in Las Vegas, Verizon Frontline turned a tech demo into a live proof of concept for what mission success looks like when autonomy, AI and resilient networks work as one system. On stage, a 5G‑connected “Robotic Emergency Dog” (RED), an off‑road comms truck and a rapid‑response satellite trailer set the scene for a panel on “Driving Mission Success Through Next Gen Innovation” with leaders from Verizon, ECS Federal and Lockheed Martin.

From Curbside Stream to Center Stage

Verizon Frontline Associate Director Matt Brungardt opened by reminding the audience that last year’s session was literally staged outside in the cold and streamed indoors, a metaphor for how far the tech and the mission have moved in a year. This time, the network, the robots and the response team were front and center, framed not as gadgets but as infrastructure that helps enable life‑or‑death decisions in the field.

Brungardt’s team, the Verizon Frontline Crisis Response Team, runs as a 24/7/365 national operation activated by a single 800 number, with support provided at no cost to public safety agencies, regardless of bandwidth consumed or hardware deployed. In 2024 alone, they responded to roughly 1,500 requests for support, delivered nearly 10,000 communication solutions and supported over 700 agencies, from small local departments to large state and federal customers.

Verizon treats resilient communications as an autonomous capability in its own right, not just as a utility. The “platform” here is the network edge where drones, ground robots, AI engines and human operators converge.

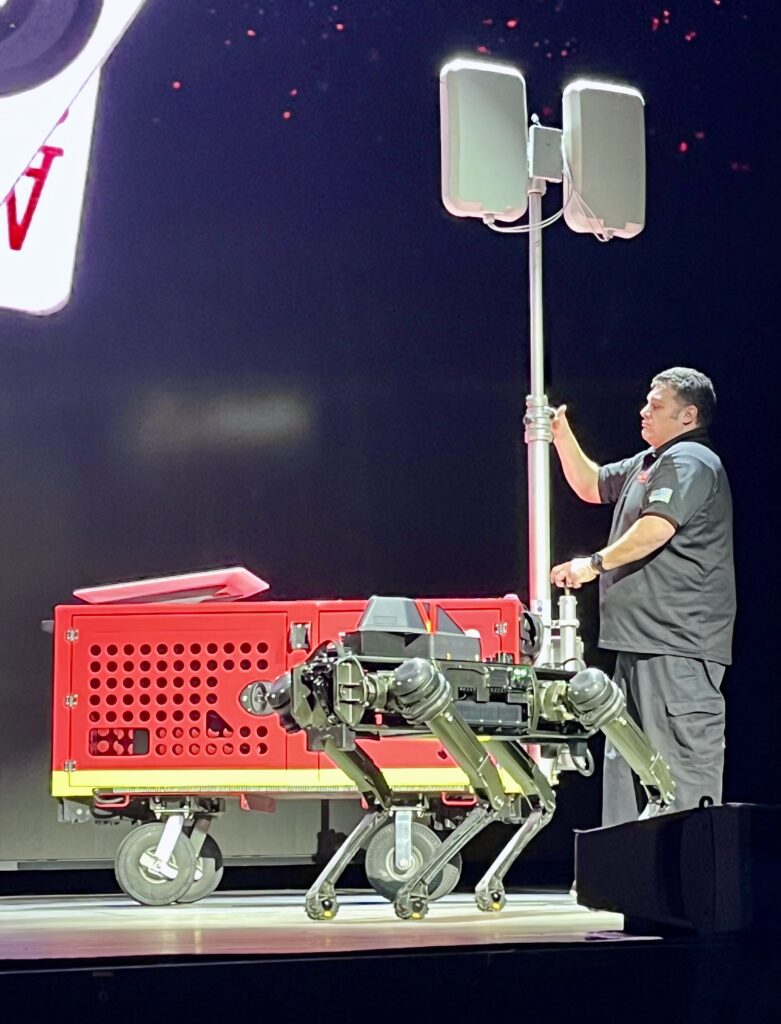

RED, the Robotic Emergency Dog

Behind Brungardt on stage were three physical embodiments of that edge: a compact rapid‑response connectivity unit, an off‑road 5G vehicle and RED, the Robotic Emergency Dog. The RED quadruped is a configurable sensor and comms platform designed to answer a hard public safety question: “Is it safe to send people downrange?” The robot carries a battery in its underside to power environmental sensors mounted on top. This enables hazmat readings, air‑quality checks and other safety assessments before human entry.

Initially, RED’s link back to the network ran through a 5G router mounted on its back. Over time, Verizon’s innovation program has iterated on that concept, treating RED as a testbed for integrating robotics, connectivity, and sensing in one mobile package. The team has exercised the robot with partners in scenarios ranging from tornadoes to flooding to wildfires, all focused on giving on-scene commanders better situational awareness without putting responders at unnecessary risk.

In autonomy terms, RED is a mobile, semi‑autonomous edge node: a robot that brings the network, the data and the sensing to where the risk is highest. For public safety agencies exploring unmanned systems, it demonstrates how robotics can be tied directly to mission‑critical communications and decision‑support workflows.

Satellites, Tethered Drones and Off‑Road 5G

Brungardt’s team also showcased a compact rapid response connectivity trailer that can be hitched, hauled and deployed in minutes. Inside, a low Earth orbit (LEO) satellite link backhauls connectivity to a device that stands up Wi‑Fi plus 4G and 5G, with onboard power that can run for three to four days and environmental control to protect the electronics. The unit can be dropped into hurricane zones, flood‑damaged areas or other disconnected environments to create localized “bubbles of coverage” for responders.

Mast antennas extend to roughly 16 feet, providing quick, portable elevation for the radios and improving coverage across complex terrain. The form factor responds directly to agency feedback. It’s small enough for multiple units in a single trailer, cheap enough to proliferate and simple enough for rapid setup.

Those ground assets are complemented by airborne autonomy. On a table in the anteroom, Verizon displayed a tethered drone capable of carrying a payload to provide overhead coverage and imagery. That drone serves two roles: as a network relay that can “push” connectivity over muddy or inaccessible ground, and as a photogrammetry platform for high‑resolution imagery in disaster zones or at major planned events.

The off‑road vehicle extends this concept even further, serving as a rolling command and communications hub. Its roof carries a LEO satellite backup and a 5G router that uses network slicing to provide first responders with dedicated network resources. Inside, it can run private networks entirely separated from commercial traffic when agencies need dedicated, mission‑specific connectivity.

AI as the Engine of Mission Cognition

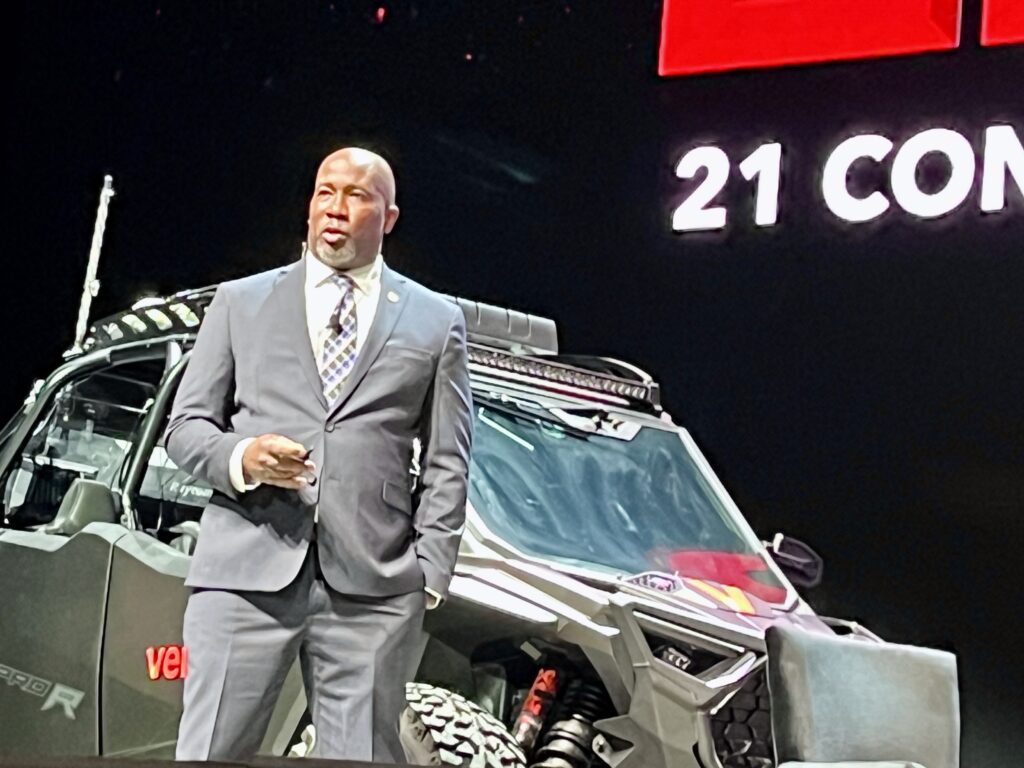

After the tech preview, Verizon’s Lamont Copeland took the conversation up a layer by focusing on how AI and networks intersect to drive “mission cognition” for public safety. None of the technology that agencies want to use, AI included, works without a network that can move data between sensors, applications and operators in near real time, whether the application is video analytics, predictive modeling or logistics.

Copeland cited survey data showing that about 12% of public safety professionals report using AI regularly today, but told the audience to expect that figure to rise toward 46% in the next five years as AI becomes a top operational priority. That shift, he argued, requires tightly integrated wireline and wireless networks that can carry mission data from the field to AI engines and then back out to frontline personnel.

To explore how that integration plays out in practice, Copeland brought two partners on stage: Amir Stephenson, Director of 5G.MIL® Programs at Lockheed Martin, and Joshua Gough, Director of Mission Capability at ECS Federal and a former CBP agent with 27 years of experience.

Stephenson framed Lockheed’s work as targeting two core problems: decision speed and decision quality in environments where data volumes, complexity and urgency are all increasing. Through the 5G.MIL program, Lockheed and Verizon are blending commercial and private networks with military‑grade systems to deliver secure, resilient communications for defense and public sector customers. As one example, stakeholders can use a commercial and/or private 5G network to detect and track intrusions into a protected space without deploying bespoke sensor grids. By treating the communications infrastructure itself as a sensing layer, the partners showed they could achieve integrated sensing and communications (ISAC) functions on a much faster timeline and at lower cost.

Gough, drawing on his DHS experience, described a government struggling under “decision latency” in a “data‑saturated environment,” noting that CBP alone sits on upward of 20 to 30 petabytes of data. Historically, agencies built dashboards and APIs that stopped at the “information layer.” They knew the numbers (for example, entries up 15% or down 50%) but often could not determine what those swings meant in operational terms. From his perspective, the next frontier is moving from raw information to true knowledge:

- Applying AI to attach meaning to enriched data, turning statistics into context that can drive action.

- Using AI to “scale cognition,” enabling systems that do more than store and display data: they interpret, reason and propose courses of action across domains.

The connective tissue between autonomy and AI rests in the ability to fuse sensor data from unmanned platforms, mobile devices and fixed systems into a shared operational picture that humans can understand and trust at tempo.

Human in the Loop, Institutional Cognition at Scale

However, both Stephenson and Gough emphasized that this is not about handing the mission over to machines. Lockheed, in particular, has anchored its 5G.MIL and autonomous platform work in “human‑in‑the‑loop” or “human‑on‑the‑loop” constructs, where AI generates courses of action at machine speed, but human decision‑makers remain responsible for choosing and authorizing responses.

Stephenson outlined two near‑term trajectories:

- Trusted AI at scale: Moving beyond “shiny object” pilots toward mission‑embedded AI tuned to specific functions such as command and control, logistics or cyber defense.

- Expanded human‑machine teaming: From fully simulated autonomous platforms to AI co‑pilots sitting alongside analysts, operators and caseworkers as “trusted agents” in day‑to‑day workflows.

Gough projected that within five years, the government will shift from scattered AI tools to mission‑level, enterprise‑grade AI, provided solutions meet stringent standards for security, legality, privacy and ethics. He sees one of the most powerful outcomes as “federated reasoning across domains,” which will allow components like CBP, ICE, and other DHS elements, and eventually DoD and beyond, to reason collectively over their combined data holdings. He described this future state as “institutional cognition,” a distributed capability that helps senior leaders, analysts and operators understand patterns, non‑obvious relationships, and weak signals that would otherwise be invisible. In the public safety context, that could mean:

- Identifying unknown individuals or networks of interest from massive digital forensics datasets.

- Predicting where cargo, vessels or people of concern are likely to be, based on telemetry and historical patterns, so that scarce resources can be pre‑positioned.

- Surfacing subtle but critical indicators (“weak signals”) that warrant human review before they become major incidents.

Both speakers stressed that government AI adoption remains cautious and risk‑aware, with most activity living in “pockets of excellence” and pilots rather than institution‑wide deployments. That caution is not slowing the pressure to operationalize AI, but it is shaping the standards and architectures vendors must meet.

What It Means for Autonomy and Public Safety

By the end of the session, Copeland summed up the journey ahead as a multi‑year partnership between carriers, primes, integrators and government customers to “enable more of the services and capabilities necessary” for mission success. In five years, he suggested, stages like the one at GBEF EDGE will likely be filled with “cognitive toys,” AI‑enabled, autonomous systems deeply integrated into public safety and national security operations.

For the autonomy ecosystem, several themes from this Verizon‑led conversation stand out:

- Network‑centric autonomy: Robots, drones and unmanned platforms only deliver their full value when coupled to resilient, flexible networks via LEO satellites, 5G connectivity, private wireless networks and portable edge units.

- AI as mission interpreter: Autonomy generates data; AI gives that data meaning by turning sensor feeds into situational awareness and courses of action that humans can trust.

- Human‑machine teaming as default: Whether it is RED padding into a hazmat scene or an AI agent co‑piloting a digital forensics analyst, autonomy in public safety will be designed around keeping humans in control while expanding their reach.

- Institutional cognition over time: As agencies federate reasoning across domains, autonomous systems will feed into a larger, cross‑enterprise brain trust that can see threats and opportunities earlier and act faster.

At GBEF EDGE, RED’s movements drew the cameras, but the real story was less about any particular device and more about an emerging architecture. We are moving into a world where autonomous platforms, AI engines and resilient networks combine to make the network itself a first responder.