By: John Crissman, CNA

Have you ever wondered how artificial intelligence (AI), machine learning (ML) and the Internet of Things (IoT) could transform the way first responders handle emergencies and potentially save countless lives? Read on to find out how CNA, a not-for-profit analytical organization that draws on deep experience in emergency management, data analytics and artificial intelligence to support government agencies, teamed up with RIIS (Research Into Internet Systems) to develop First Responder Awareness Monitoring during Emergencies (FRAME™) for the AI for IoT Information (AI3) Prize Competition, and won! That win, however, was just the start of an ongoing effort to improve this key tool and, in the end, both public safety and emergency response. Here's the story of the journey, the tech and the future of it all.

Starting FRAME

In January 2023, I was part of the CNA-RIIS team of engineers that started developing FRAME™ for the AI3 Prize Competition. Hosted by Texas A&M University, the Texas A&M Engineering Extension Service and the National Institute of Standards and Technology (NIST), the competition challenged teams to develop AI solutions that help first responders cope with the flood of data generated by IoT devices, smart buildings and other sources, and turn that data into better situational awareness and faster response times.

FRAME is an integrated system designed to extend the capabilities of first responders. During an emergency response, it acquires, processes and presents data from various sources to improve situational awareness and decision-making, with the ultimate goal of making both first responders and affected citizens safer.

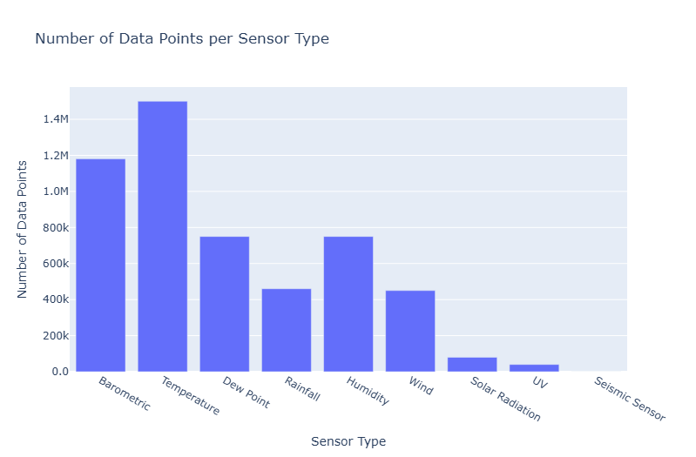

The ML model we developed for the initial FRAME prototype and for the AI3 Prize Competition analyzes patterns in IoT sensor readings to automatically determine the type of device that produced them, even when explicit identification information is missing. After reviewing the literature on best practices for applying ML to IoT data in this fast-evolving field, and specifically for detecting a data source's device type, our team decided to build a neural network with TensorFlow's Keras Sequential model API. We chose Sequential for its flexibility in handling diverse IoT sensor data, its strong community support and its robust performance, which made it well suited to the FRAME prototype. We trained the model on data from the nine IoT sensor types shown in the chart below and reached a validation accuracy that fluctuated at a little over 80 percent.
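To make the device-type classification step more concrete, here is a minimal sketch of how a Keras Sequential classifier over nine device types might be set up. Everything beyond "Sequential model, nine classes, validation accuracy" is an assumption: the feature set (simple statistics over a window of readings), the window size, the layer sizes and the names `window_features` and `build_model` are hypothetical, since FRAME's actual features and architecture are not described here. The random data exists only to show the shapes.

```python
import numpy as np
import tensorflow as tf

NUM_DEVICE_TYPES = 9  # nine IoT sensor types, as in the article
WINDOW = 32           # hypothetical: number of readings summarized per sample


def window_features(readings):
    """Hypothetical feature set: summarize a window of raw readings
    into a fixed-length vector the network can consume."""
    r = np.asarray(readings, dtype=np.float32)
    diffs = np.diff(r)  # step-to-step changes capture how "jumpy" a sensor is
    return np.array(
        [r.mean(), r.std(), r.min(), r.max(), np.abs(diffs).mean(), diffs.std()],
        dtype=np.float32,
    )


def build_model(num_features, num_classes=NUM_DEVICE_TYPES):
    """A small Sequential classifier; layer sizes are illustrative, not FRAME's."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(num_features,)),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(32, activation="relu"),
        # One probability per device type
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # integer labels 0..num_classes-1
        metrics=["accuracy"],
    )
    return model


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic placeholder data (random windows, random labels): shape check only
    X = np.stack([window_features(rng.normal(size=WINDOW)) for _ in range(200)])
    y = rng.integers(0, NUM_DEVICE_TYPES, size=200)
    model = build_model(X.shape[1])
    # validation_split holds out the last 20% of samples to report val_accuracy
    history = model.fit(X, y, epochs=2, validation_split=0.2, verbose=0)
    print("val_accuracy:", history.history["val_accuracy"][-1])
```

With random labels the printed accuracy hovers near chance; with real labeled sensor windows, the per-epoch `val_accuracy` is the kind of validation metric the figure above refers to, and its epoch-to-epoch movement is one reason such a number "fluctuates."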

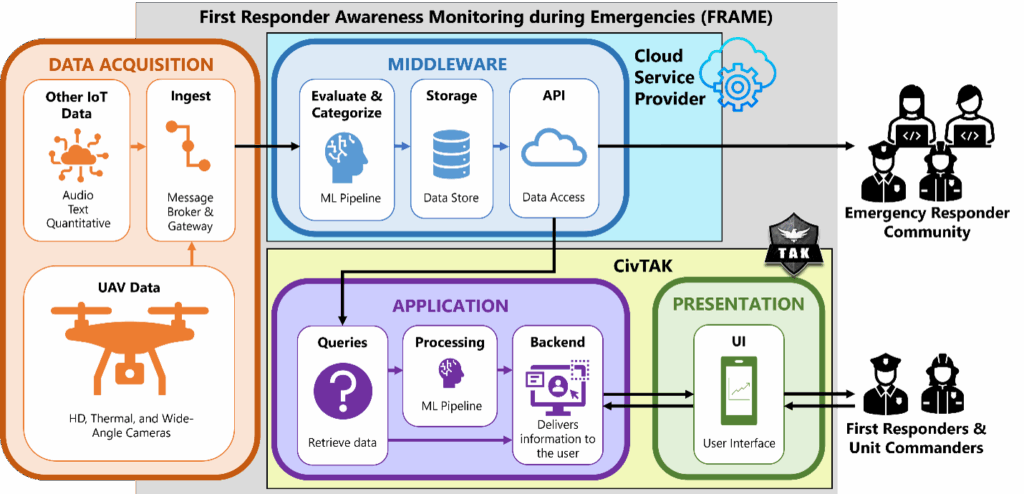

This ML model is part of the ML pipeline in FRAME's Middleware component. Here's a breakdown of FRAME's four components:

- Data Acquisition: collects and categorizes data from various sources, including IoT sensors and crowd-sourced information.

- Middleware: processes the data through the ML pipeline and stores it for later use.

- Application: generates actionable insights and alerts from the processed data using tools such as the Team Awareness Kit (TAK), with features such as geospatial mapping, object detection and sentiment extraction that enhance situational awareness.

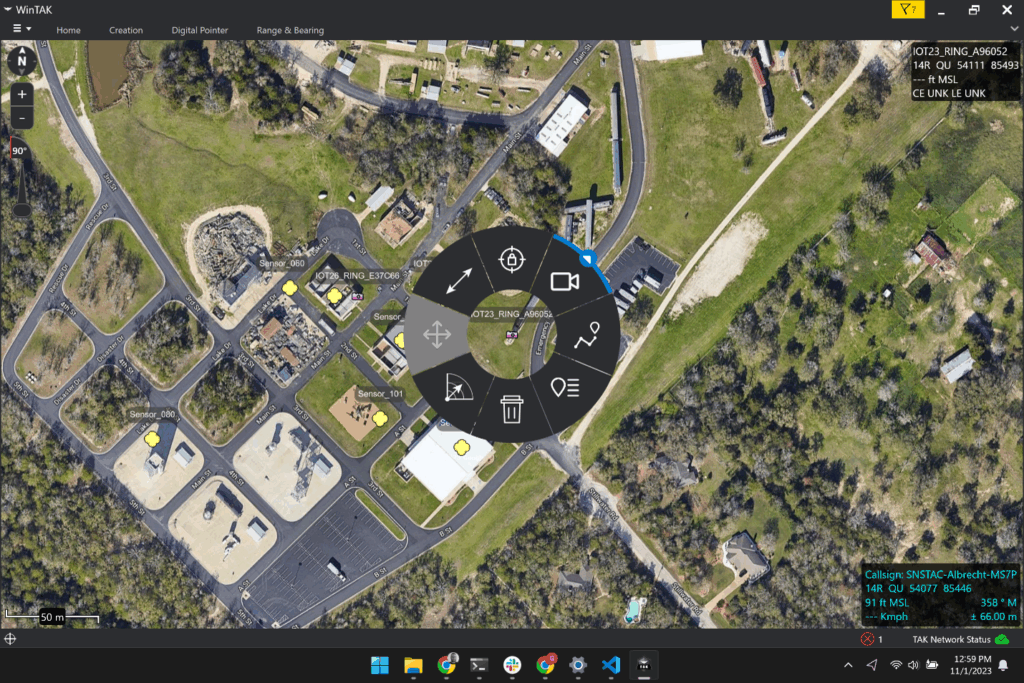

- Presentation: offers an intuitive user interface through Civilian TAK (CivTAK), an open-source geospatial infrastructure and situational awareness app for smartphones that many federal, state and local emergency management agencies use. It lets first responders and incident commanders access and visualize critical information through online and offline mapping, collaboration features and high-resolution imagery.
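The four components can be pictured as stages that hand data to one another. The sketch below is a hypothetical, standard-library-only illustration of that flow, not FRAME's code: the record format, the threshold rule in `classify_device` (a stand-in for the ML model), the alert rule and all device labels are assumptions. For the Presentation stage it assumes alerts reach a TAK client as Cursor on Target (CoT) XML events, the message format TAK clients exchange; the CoT `type` and `how` values used here are placeholders.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Reading:
    source_id: str
    lat: float
    lon: float
    values: list[float]
    category: str = "iot"  # hypothetical source tag, e.g. "iot" or "crowd"


@dataclass
class ProcessedReading:
    reading: Reading
    device_type: str


def acquire(raw_records):
    """Data Acquisition: normalize raw records and categorize them by source."""
    return [Reading(r["id"], r["lat"], r["lon"], list(r["values"]),
                    r.get("category", "iot")) for r in raw_records]


def classify_device(values):
    """Hypothetical stand-in for the ML model: a crude threshold rule."""
    return "high_range_sensor" if max(values) > 100 else "ambient_sensor"


class Middleware:
    """Middleware: runs readings through the (pluggable) classifier and stores results."""

    def __init__(self, classifier):
        self.classifier = classifier
        self.store = []  # in-memory stand-in for persistent storage

    def process(self, readings):
        processed = [ProcessedReading(r, self.classifier(r.values)) for r in readings]
        self.store.extend(processed)
        return processed


def generate_alerts(processed, threshold):
    """Application: hypothetical rule that flags any reading above a threshold."""
    return [p for p in processed if max(p.reading.values) > threshold]


def to_cot(p, stale_minutes=5):
    """Presentation: serialize an alert as a minimal CoT event for a TAK client."""
    now = datetime.now(timezone.utc)
    fmt = "%Y-%m-%dT%H:%M:%S.%fZ"
    event = ET.Element("event", {
        "version": "2.0",
        "uid": f"frame-{p.reading.source_id}",
        "type": "a-u-G",  # placeholder: atom, unknown affiliation, ground
        "how": "m-g",     # placeholder: assumes a machine/GPS-derived position
        "time": now.strftime(fmt),
        "start": now.strftime(fmt),
        "stale": (now + timedelta(minutes=stale_minutes)).strftime(fmt),
    })
    # 9999999.0 is the conventional CoT value for "unknown" height/error
    ET.SubElement(event, "point", {"lat": str(p.reading.lat), "lon": str(p.reading.lon),
                                   "hae": "9999999.0", "ce": "9999999.0", "le": "9999999.0"})
    detail = ET.SubElement(event, "detail")
    ET.SubElement(detail, "remarks").text = f"{p.device_type}: peak {max(p.reading.values)}"
    return ET.tostring(event, encoding="unicode")


if __name__ == "__main__":
    raw = [  # example records with made-up coordinates
        {"id": "s1", "lat": 30.57, "lon": -96.35, "values": [21.0, 22.5, 140.0]},
        {"id": "s2", "lat": 30.58, "lon": -96.36, "values": [20.0, 20.4, 20.1]},
    ]
    mw = Middleware(classify_device)
    for alert in generate_alerts(mw.process(acquire(raw)), threshold=100):
        print(to_cot(alert))
```

Injecting the classifier into `Middleware` keeps the stage boundaries clean: a trained model could replace the threshold stub without touching acquisition or presentation.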

Disaster City

In November 2023, we traveled to Disaster City for the final evaluations of the FRAME prototype in the AI3 Prize Competition. Disaster City, a renowned training facility in College Station, Texas, is part of the Texas A&M Engineering Extension Service and sits near the Texas A&M University campus. During our stay, we gave a presentation on FRAME along with a demonstration of its ability to ingest data, categorize it with the ML model and display it in the CivTAK client (see the image of the FRAME user interface). The competition also included a hackathon, in which we had to remotely connect FRAME to the AI3 competition sensors and determine what types of sensors they were.

Later that evening, we found out that we had won first place in the AI3 Prize Competition!

Engaging with Industry and Government

Our success in the AI3 Prize Competition opened up many opportunities for our team to engage with others in industry, government and academia.

In May 2024, our team was invited to Interop 2024, hosted by the Texas A&M University Internet2 Technology Evaluation Center (ITEC) Interoperability Institute, where industry leaders gathered to improve first responder communications through workshops, major incident simulations and technology demonstrations. We took part in a panel discussion on how FRAME applies to real-world disaster scenarios and talked directly with first responders and technology providers to better understand their needs, real-world challenges and technology stacks.

Later that summer, we were invited to 5×5: The Public Safety Innovation Summit 2024 in Chicago. Hosted by the FirstNet Authority and NIST, the summit focused on the future of public safety communications technology through demonstrations and discussions. Our FRAME demonstration drew significant attention from the first responder and public safety communications community. We also joined a panel discussion on next steps for public safety technology, including the use of composite AI for tasks such as wildfire tracking, first responder health monitoring and damage detection, which highlighted the potential of advanced technologies in emergency scenarios.

What’s Next?

Looking ahead, the development and enhancement of the FRAME prototype will focus on several key areas to ensure the concept effectively meets the needs of first responders:

- Expanding Application Capabilities: Developing additional features to enhance situational awareness and decision-making during emergencies, such as advanced object detection algorithms and sentiment analysis tools.

- Engaging with Stakeholders: Collaborating with industry leaders, technology providers and regulatory bodies to gather insights and feedback on FRAME’s development.

- Prioritizing First Responder Input: Actively seeking input from the first responder community to understand their priorities and challenges, so that FRAME evolves to address real-world needs effectively.

By focusing on these next steps, we aim to develop FRAME into a vital tool for improving public safety and emergency response. The journey so far has been both challenging and rewarding. It has deepened our understanding of the critical role technology plays in emergency response and reinforced our excitement for future advancements.