By: Dawn Zoldi

In a CES packed with humanoids, AI assistants and next-gen mobility concepts, one Tokyo startup quietly showed off something far more fundamental: a way to give robots a sense of touch. For the future of autonomy, from factory floors to fulfillment centers, that could be a turning point.

From Lab Research to CES Spotlight

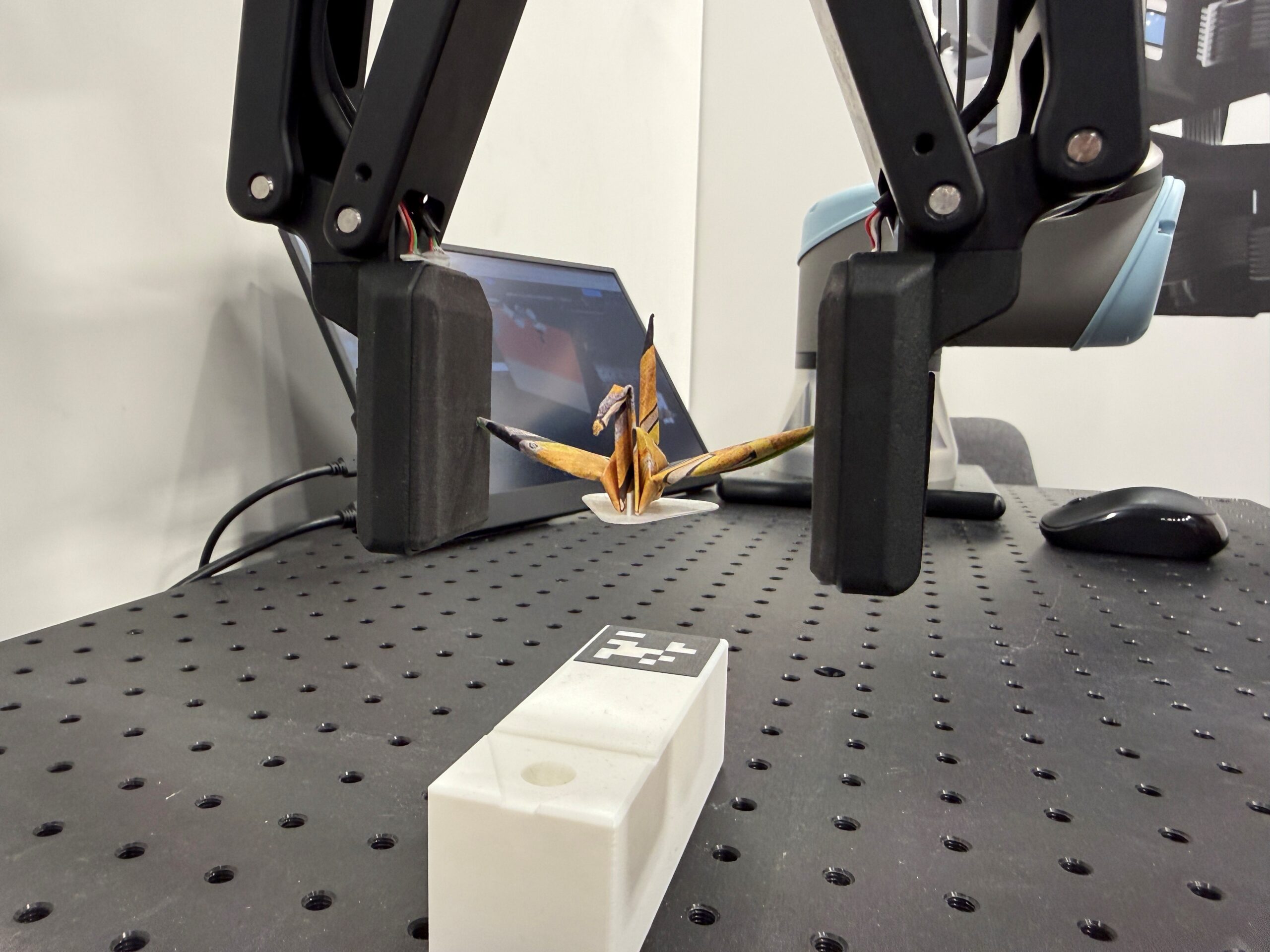

XELA Robotics, a spin-out from Waseda University in Tokyo, has been working on tactile sensing technology since before the company formally launched in 2018. At CES 2026 in Las Vegas, co-founder and CEO Dr. Alexander (Alex) Schmitz used a simple example to explain the problem they are solving: when a robot grasps a cup sealed with a lid, it typically cannot tell whether the cup is empty or full. That missing sensitivity limits how safely and reliably robots can interact with the real world. XELA’s answer is a tactile sensor platform designed to give robot hands and grippers a “human sense of touch” by layering rich contact information on top of the vision systems that dominate today’s autonomous workflows.

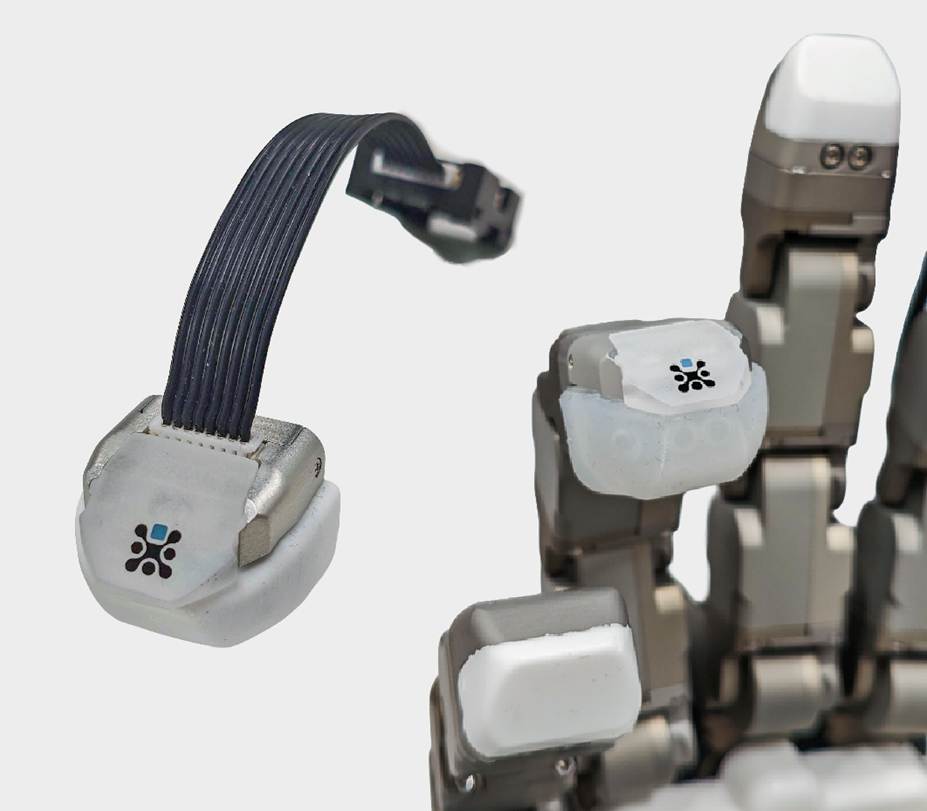

How XELA Gives Robots Touch

Rather than manufacturing its own robotic hands, XELA works as an enabler for the broader robotics ecosystem. Customers choose their preferred robot hand or gripper, and XELA integrates its tactile sensors into that hardware to make it more capable. This approach lets integrators and OEMs upgrade existing platforms without re-architecting entire systems.

The sensors measure properties such as contact location, force and object characteristics like hardness for more nuanced grasp control. That information allows robots to adjust grip strength dynamically, avoid crushing fragile items and detect subtle misalignments that cameras alone often miss. For autonomy developers, the result is a richer perception stack that blends vision, motion and touch into one control loop.
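To make the idea of dynamic grip adjustment concrete, here is a minimal sketch of one step of a force-feedback loop. It is illustrative only and is not XELA's software or API: the `TactileReading` type, the `next_grip_force` function and every parameter value (friction coefficient, safety margin, force cap) are hypothetical assumptions. The sketch assumes a simple Coulomb friction model, in which an object stays in the grasp as long as the tangential (shear) force does not exceed the friction coefficient times the squeezing (normal) force.

```python
from dataclasses import dataclass


@dataclass
class TactileReading:
    """Hypothetical sensor sample; field names are assumptions, not a real API."""
    normal_force: float  # newtons pressing into the sensor pad
    shear_force: float   # newtons acting along the pad surface


def next_grip_force(
    reading: TactileReading,
    current_cmd: float,
    friction: float = 0.5,   # assumed friction coefficient between pad and object
    margin: float = 1.2,     # safety factor above the bare minimum holding force
    deadband: float = 0.5,   # newtons of slack before the grip is relaxed
    step: float = 0.2,       # newtons added or removed per control cycle
    max_force: float = 10.0, # hard cap so fragile items are not crushed
) -> float:
    """Return the grip force to command on the next control cycle."""
    # Coulomb friction: holding requires shear <= friction * normal,
    # so the minimum normal force is shear / friction (times a margin).
    required = margin * reading.shear_force / friction

    if reading.normal_force < required:
        current_cmd += step  # grip is too loose: tighten
    elif reading.normal_force > required + deadband:
        current_cmd -= step  # grip is tighter than needed: relax
    # Otherwise hold the current command.

    # Clamp to the allowed range.
    return min(max(current_cmd, 0.0), max_force)
```

Called once per control cycle with the latest sensor sample, a function like this nudges the commanded force toward the lowest value that still holds the object. A vision-only system has no equivalent signal to drive such a loop.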

Why Touch Matters for Autonomy

Robots have become faster, cheaper and more precise, but they still struggle with novel or unstructured objects, especially outside tightly controlled environments. Dr. Schmitz argues that when robots encounter something they have never seen before, whether a new consumer product, a unique packaging form factor or a slightly misaligned component, tactile feedback is crucial for reliable manipulation.

This is especially important in mixed human–robot environments such as:

- Factory automation: XELA’s sensors support insertion tasks such as placing memory modules onto motherboards, where relying on vision alone leaves too much risk of misalignment and damage.

- Warehouse automation: XELA-equipped systems can pick up unfamiliar items, estimate weight and hardness and then automatically modulate grasping force to pack them safely.

By making robots more adaptive and less dependent on rigidly pre-defined object libraries, tactile sensing helps bridge the gap between industrial automation and the more generalized autonomy seen in advanced robotics and mobile platforms.

From Hardware to Full Solutions

While XELA’s origins are in sensor technology, the company is now investing heavily in software to make its tactile data more accessible and actionable. Dr. Schmitz explained that a key goal for 2026 is to provide more complete solutions that orchestrate the robot arm, gripper and tactile sensors in a unified control stack.

The vision is to reduce integration burden so customers can focus on workflows and outcomes rather than low-level control and calibration. By offering application-ready software along with the sensors, XELA aims to compress deployment timelines for automation projects that previously stalled on complex manipulation challenges.

A Wider Stage for Human-Touch Robotics

Although XELA has already participated in more technical and robotics-focused events, CES 2026 marked the company’s first appearance at the world’s biggest consumer technology show. For Dr. Schmitz, it was an opportunity to move tactile sensing from niche robotics research into the mainstream autonomy conversation and reach a wider audience of investors, integrators and potential partners.

XELA wants the world to know that adding touch can unlock classes of tasks that were previously considered too risky or too variable to automate. From delicate electronics assembly to high-throughput e-commerce fulfillment, tactilely aware robots could expand automation into new corners of the global economy. As vision and AI mature, the next wave of autonomy will depend on richer, more human-like sensing modalities to handle complexity at scale. And touch may prove to be one of the most critical pieces of that puzzle.

Dawn Zoldi/P3 Tech Consulting

A live demo of how XELA Robotics enables robotic lifting and placing, shown here handling an origami paper crane without damaging it.